- MOOC Basics of managing and sharing research data


2.7 Three distinct steps (secure backup, depositing in a repository, long-term archiving)


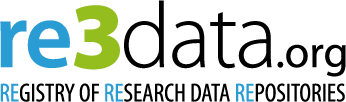

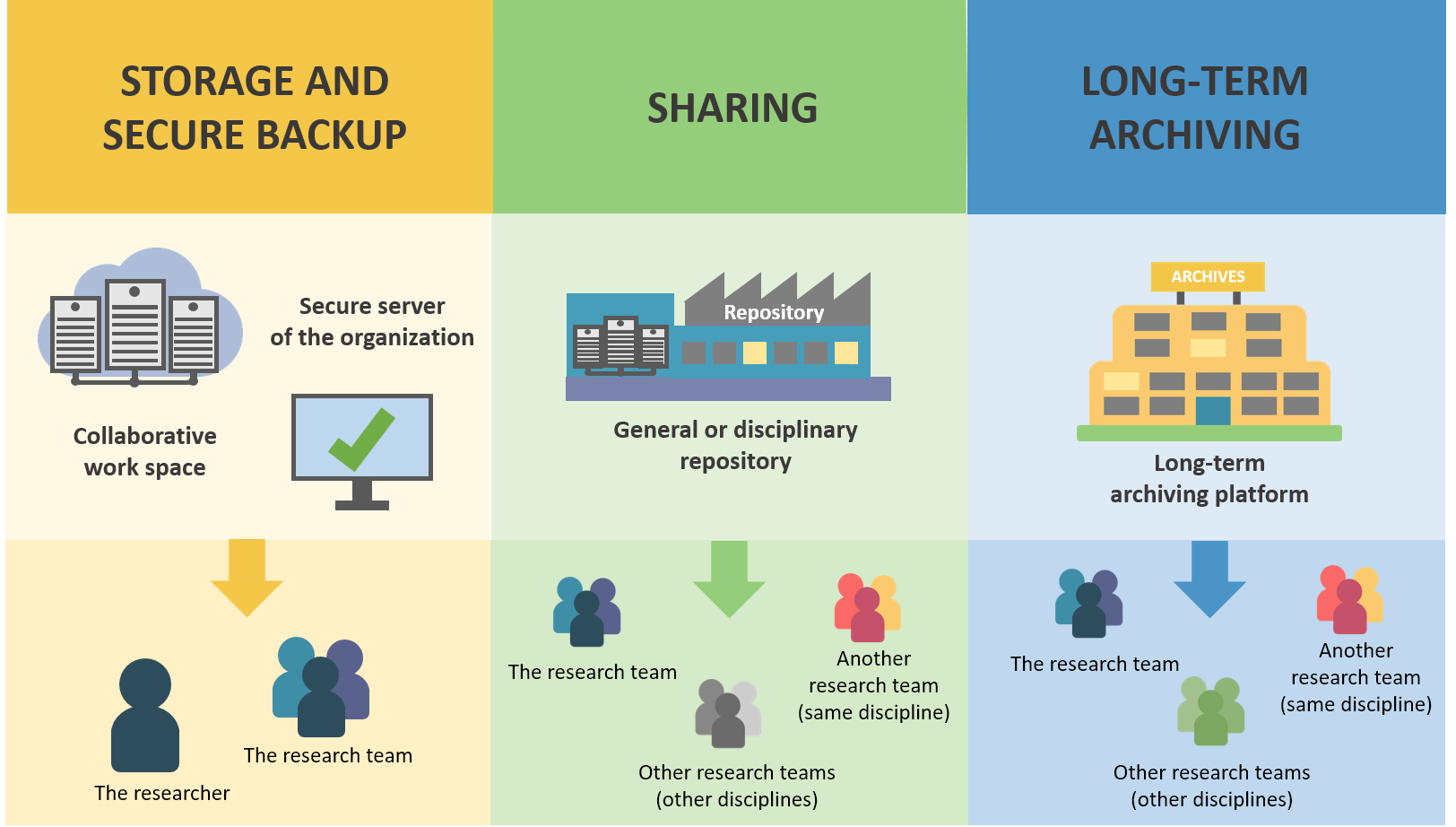

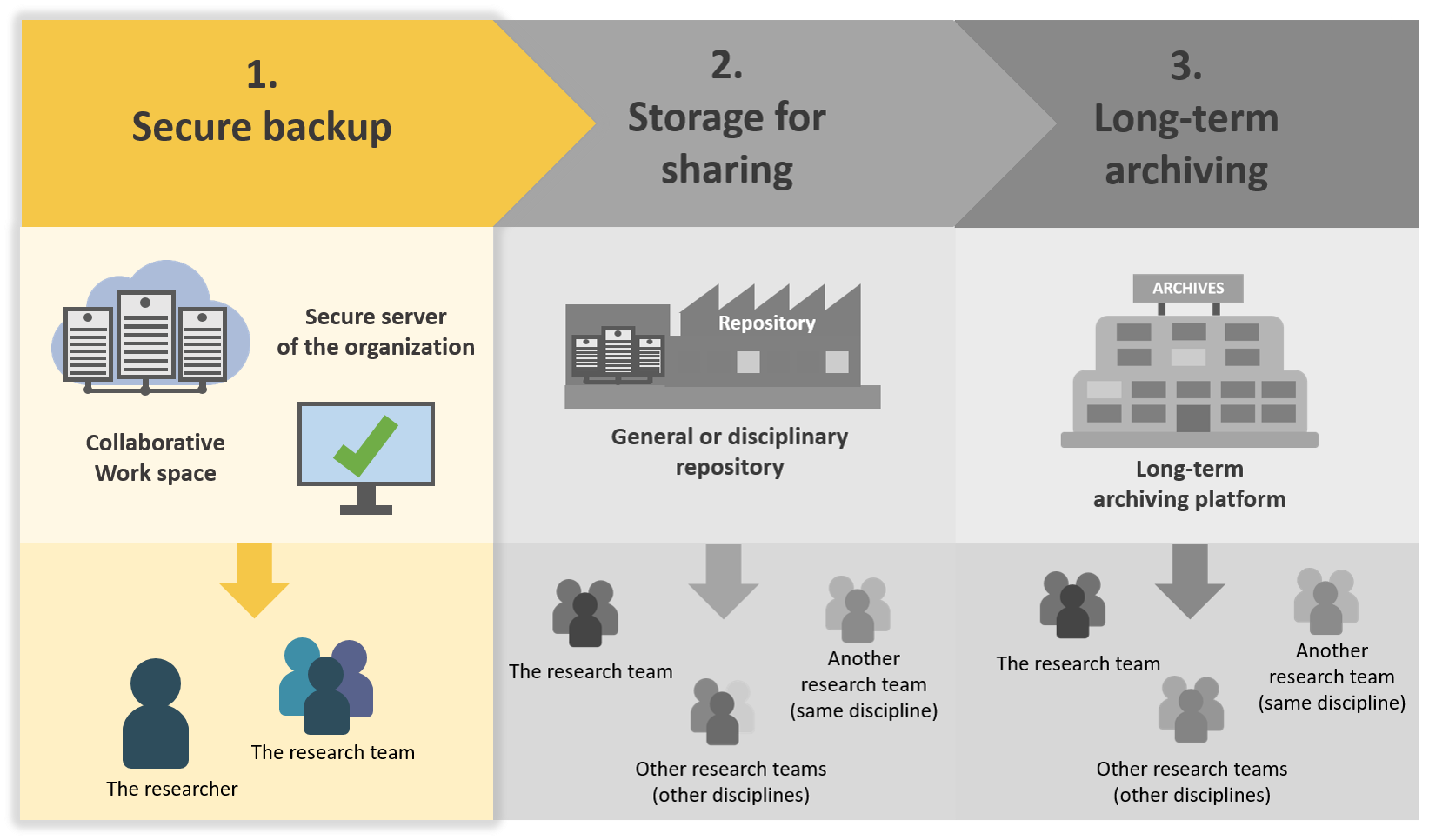

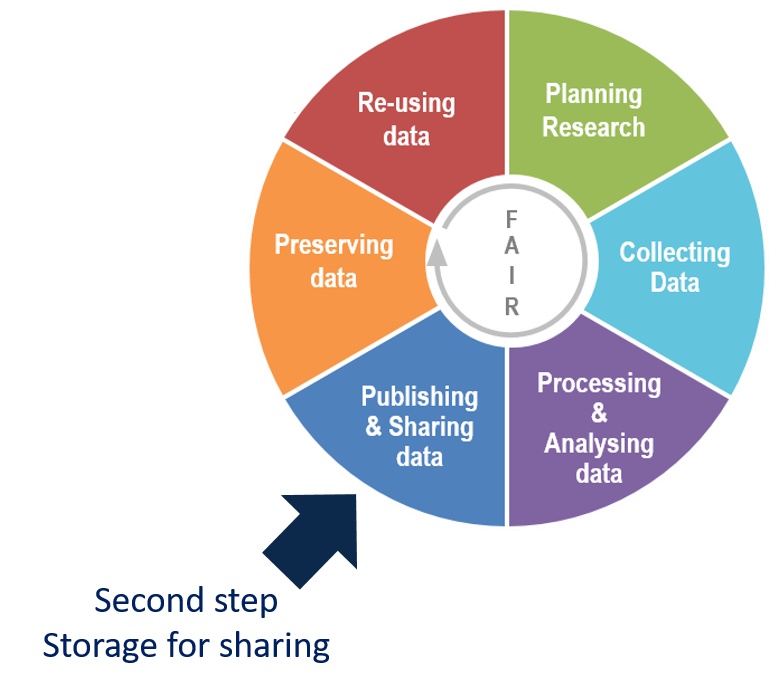

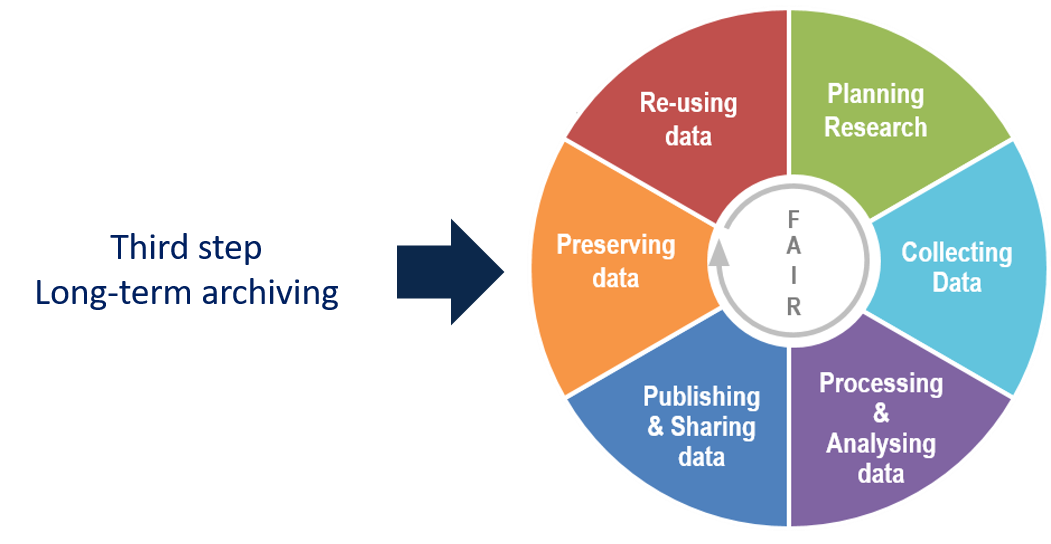

Storage and secure backup, sharing, and long-term archiving occur at different steps of the data lifecycle and have distinct functions.

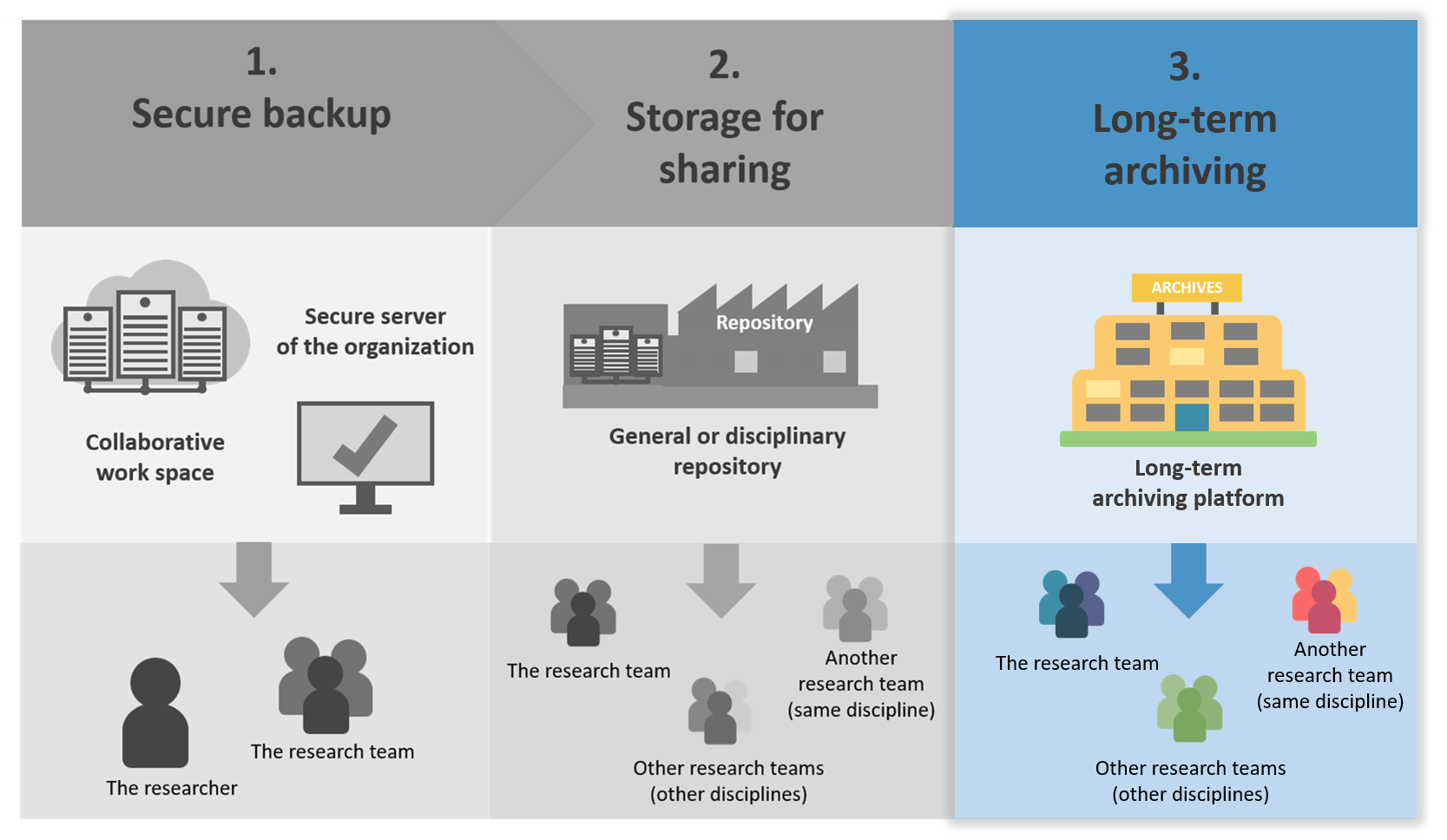

The following diagram illustrates the differences between these 3 steps:

Source: DoRANum - Stockage, partage et archivage : quelles différences ? (Storage, sharing and archiving: what are the differences?)


1. Storage and secure data backup during the project

The first step is the storage and secure backup of the data throughout the project.

The objectives are to:

- ensure data security

- facilitate access for all project collaborators

Storage and secure data backup in the data lifecycle

This concerns the first part of the data lifecycle.

Adaptation of Research data lifecycle – UK Data Service


Secure data backup measures to be implemented

Efficient backup means duplicating the data and storing the copies in different locations, on different media, at intervals suited to the project.

Best practice is to apply the 3-2-1 rule, which means:

- keep 3 copies of the data,

- on at least 2 different storage media or technologies,

- with 1 copy kept off-site.
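The three conditions of the 3-2-1 rule can be checked mechanically against a backup plan. A minimal Python sketch, assuming a hypothetical `Copy` record that describes each copy (working copy included) by its location, its storage medium and whether it is kept off-site:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of the dataset (hypothetical model, for illustration only)."""
    location: str   # e.g. "lab workstation", "institutional server"
    medium: str     # storage medium or technology, e.g. "SSD", "HDD", "tape", "cloud"
    off_site: bool  # True if the copy is stored at another physical site


def satisfies_3_2_1(copies: list[Copy]) -> bool:
    """Return True if the plan meets all three conditions of the 3-2-1 rule."""
    enough_copies = len(copies) >= 3                     # 3 copies in total
    enough_media = len({c.medium for c in copies}) >= 2  # at least 2 distinct media
    has_off_site = any(c.off_site for c in copies)       # at least 1 copy off-site
    return enough_copies and enough_media and has_off_site


plan = [
    Copy("lab workstation", "SSD", off_site=False),
    Copy("external drive", "HDD", off_site=False),
    Copy("institutional cloud", "cloud", off_site=True),
]
print(satisfies_3_2_1(plan))
```

Dropping any one of the three copies in this example, or putting all three on the same medium, makes the check fail.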

In any case, backups must be organized and planned, with careful version management. At each step of the project, select which data to back up and which to delete. Keep the successive states of the data linked to the corresponding processing steps, so that you can return to a previous version if necessary.
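One way to keep successive states of the data tied to processing steps is to store each backup as a new, numbered copy tagged with the step that produced it, and to verify every copy with a checksum. A minimal Python sketch under those assumptions; the naming scheme `<name>_vNN_<step><ext>` and the function `backup_version` are hypothetical, not a prescribed method:

```python
import hashlib
import re
import shutil
from pathlib import Path


def sha256(path: Path) -> str:
    """Return the SHA-256 checksum of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_version(src: Path, backup_dir: Path, step: str) -> Path:
    """Copy src into backup_dir as the next numbered version, tagged with a processing step.

    Earlier versions are never overwritten, so any previous state can be restored.
    """
    backup_dir.mkdir(parents=True, exist_ok=True)
    # Hypothetical naming scheme: <stem>_vNN_<step><suffix>, e.g. survey_v02_cleaned.csv
    pattern = re.compile(rf"^{re.escape(src.stem)}_v(\d+)_.+{re.escape(src.suffix)}$")
    versions = [int(m.group(1)) for p in backup_dir.iterdir() if (m := pattern.match(p.name))]
    dest = backup_dir / f"{src.stem}_v{max(versions, default=0) + 1:02d}_{step}{src.suffix}"
    shutil.copy2(src, dest)  # copy contents and timestamps
    if sha256(dest) != sha256(src):  # verify the copy before trusting it
        dest.unlink()
        raise OSError(f"checksum mismatch while backing up {src} to {dest}")
    return dest
```

For example, `backup_version(Path("survey.csv"), Path("backup"), "raw")` would create `backup/survey_v01_raw.csv`, and a later call with `"cleaned"` would create `survey_v02_cleaned.csv` without touching the first copy. This covers versioning only; the copies still need to be spread across media and sites.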

This also requires choosing a hosting and backup policy suited to the project's specific backup needs (for example, sensitive data or large volumes). Options include local servers (virtual machines), an institutional cloud with secure access, etc.

To learn more about the durability of storage media, see this infographic:

Source: von Rekowski, Thomas. (2018, October). Durability of Storage Media. Zenodo.

Folder structure and file naming

Reliable access requires rules for organizing the folder structure and for giving data files unique, accurate names.
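A naming rule is easiest to respect when it is generated and checked automatically. A minimal Python sketch, assuming one possible convention, `YYYYMMDD_project_description_vNN.ext` in lowercase with no spaces or accents; this convention and the helper names are hypothetical, so adapt them to the rules agreed on by your project:

```python
import re
import unicodedata
from datetime import date

# Hypothetical convention: YYYYMMDD_project_description_vNN.ext (two-digit version)
NAME_PATTERN = re.compile(r"\d{8}_[a-z0-9-]+_[a-z0-9-]+_v\d{2}\.[a-z0-9]+")


def slug(text: str) -> str:
    """Lowercase, strip accents, and replace any other characters with hyphens."""
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode()
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def build_filename(day: date, project: str, description: str, version: int, ext: str) -> str:
    """Build a file name that follows the convention above."""
    return f"{day:%Y%m%d}_{slug(project)}_{slug(description)}_v{version:02d}.{ext.lower().lstrip('.')}"


def is_valid_filename(name: str) -> bool:
    """Check that an existing file name follows the convention."""
    return NAME_PATTERN.fullmatch(name) is not None


print(build_filename(date(2024, 3, 5), "Soil Survey", "Températures brutes", 1, "CSV"))
print(is_valid_filename("Final data (2).xlsx"))
```

The date prefix makes files sort chronologically, and the version suffix pairs naturally with the version management described above.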

File Formats

The choice of a format can be guided by:

- the recommendations of an institution,

- the uses of the scientific community of the discipline,

- the software or equipment used.

Ideally, choose file formats that are as open (non-proprietary), standardized and durable as possible, for example:

- prefer .csv over .xls

- prefer .odt to .doc

- prefer uncompressed .tif over .jpg (JPEG compression is lossy)

In any case, state in the data management plan (DMP) which formats will be used.
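Listing the formats for the DMP, and spotting files that could move to a more open format, can be automated with a directory scan. A minimal Python sketch; the `PREFERRED` mapping only encodes the spreadsheet and text examples given above and is an assumption to extend with your institution's or discipline's recommendations:

```python
from collections import Counter
from pathlib import Path

# Illustrative mapping (proprietary format -> more open alternative), from the examples above.
PREFERRED = {".xls": ".csv", ".doc": ".odt"}


def format_inventory(folder: Path) -> Counter:
    """Count files per extension, e.g. to list in the DMP the formats actually used."""
    return Counter(p.suffix.lower() for p in folder.rglob("*") if p.is_file())


def formats_to_migrate(folder: Path) -> dict[str, str]:
    """Map each file whose format has a preferred open alternative to that alternative."""
    report = {}
    for path in sorted(folder.rglob("*")):
        target = PREFERRED.get(path.suffix.lower())
        if path.is_file() and target:
            report[path.relative_to(folder).as_posix()] = target
    return report
```

The sketch only flags candidates. The conversion itself should be checked by hand, since exporting a spreadsheet to CSV, for instance, keeps one sheet per file and drops formulas and formatting.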


2. Depositing data in a repository for sharing

This step often takes place after the project ends (although you can share your data earlier): the datasets are deposited in a repository.

A repository enables the storage, access and reuse of data.

Sharing data in a repository provides broad access for the scientific community over the short to medium term (5 to 10 years).

Sharing data in the data lifecycle

Data sharing is often complementary to scientific publication during and after the end of the research project.

Adaptation of Research data lifecycle – UK Data Service


How to prepare data according to FAIR principles

The goal is to share the research data of the project in optimal conditions.

All data must be prepared according to the FAIR principles (Findable, Accessible, Interoperable, Reusable), even if they are shared only partially or with restricted access.

The data must be deposited in the chosen repository together with their metadata and, where applicable, the source code needed to read and understand them. Here is a checklist for preparing the data efficiently:

Not all data necessarily need to be shared. The research team must select the datasets they wish to share and, for each of them, define the access conditions.

Check the compatibility and interoperability of the data formats and, if necessary, migrate the data to an appropriate format that is as open as possible.

If necessary, prepare the source code (e.g., scripts) needed to read and process the data.

Complete and enrich the metadata according to the requirements of the chosen repository: if not already done, choose a metadata standard (or, if no suitable standard exists, create a metadata schema), then fill in the fields for each dataset following the adopted standard.
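Before deposit, it helps to check that every dataset's record fills in the fields the chosen standard requires. A minimal Python sketch, assuming a simplified flat record whose required fields are modelled on the six mandatory properties of the DataCite Metadata Schema (Identifier, Creator, Title, Publisher, PublicationYear, ResourceType); the field names and example values are illustrative, not an official serialization:

```python
# Simplified stand-in for DataCite's mandatory properties (illustrative field names).
REQUIRED_FIELDS = ("identifier", "creators", "title", "publisher", "publication_year", "resource_type")


def missing_fields(record: dict) -> list[str]:
    """Return the required fields that are absent or empty in a metadata record."""
    return [field for field in REQUIRED_FIELDS if not record.get(field)]


# Hypothetical record for one dataset
record = {
    "identifier": None,  # e.g. a DOI, often assigned by the repository at deposit
    "creators": ["Doe, Jane"],
    "title": "Soil temperature measurements, field campaign",
    "publisher": "Example Data Repository",
    "publication_year": 2024,
    "resource_type": "Dataset",
    "description": "Hourly soil temperature at 10 cm depth.",
}
print(missing_fields(record))
```

Here only `identifier` is reported as missing. Each repository defines its own required and recommended fields, so the tuple should follow the standard actually adopted.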

To learn more about preparing your data collection for deposit, watch this video by the UK Data Service:

How to choose a data repository

There are different categories of repositories:

- publisher-specific

- discipline-specific

- institution-specific

- and multidisciplinary repositories.

Most often, the repository is recommended by institutions (e.g., the French repository Data INRAE), by funders (e.g., Zenodo, recommended by the European Commission) or by the scientific community (e.g., GenBank, Pangaea, Dryad). It is sometimes required by a publisher (e.g., Gene Expression Omnibus).

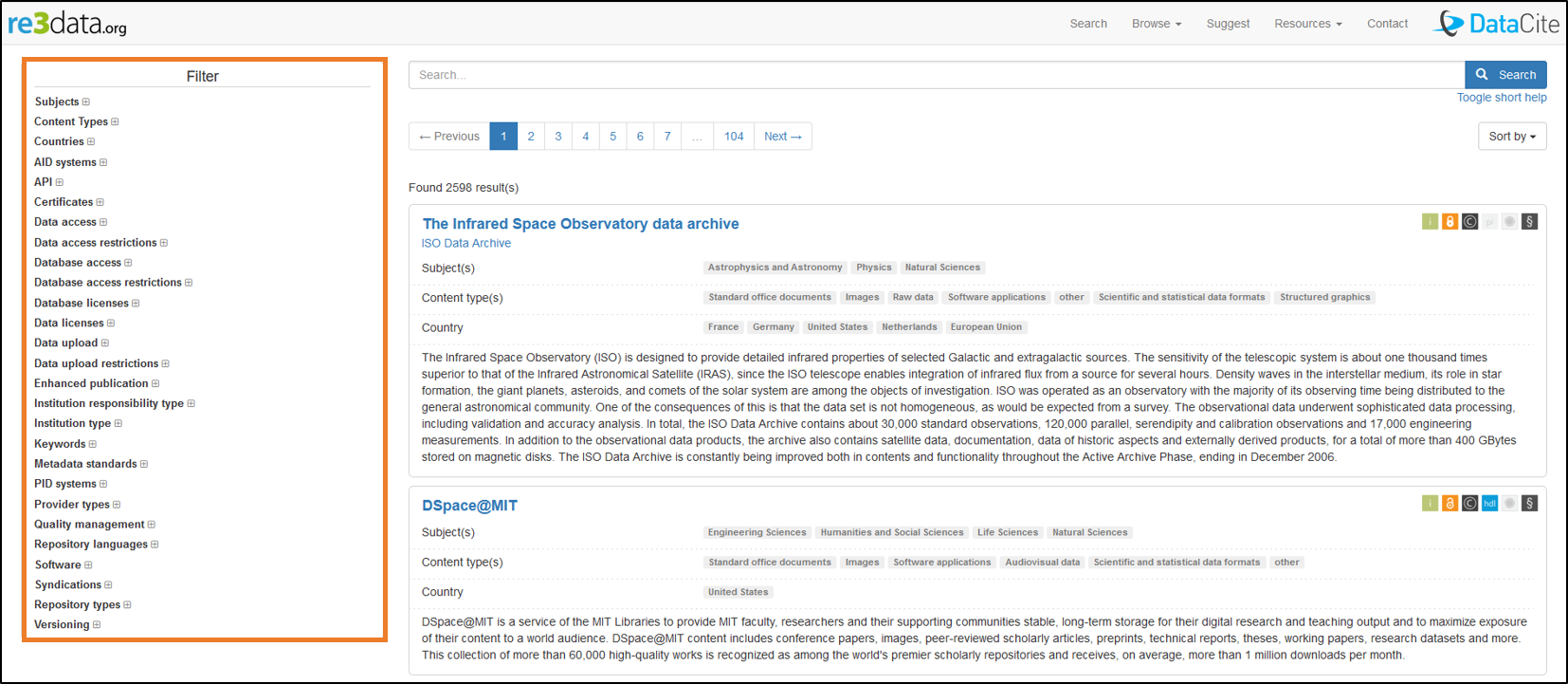

If there is no recommendation, choose one from a directory (e.g., re3data, the Open Access Directory (OAD), OpenDOAR, FAIRsharing).

In any case, a data librarian can help the research team choose a relevant repository.

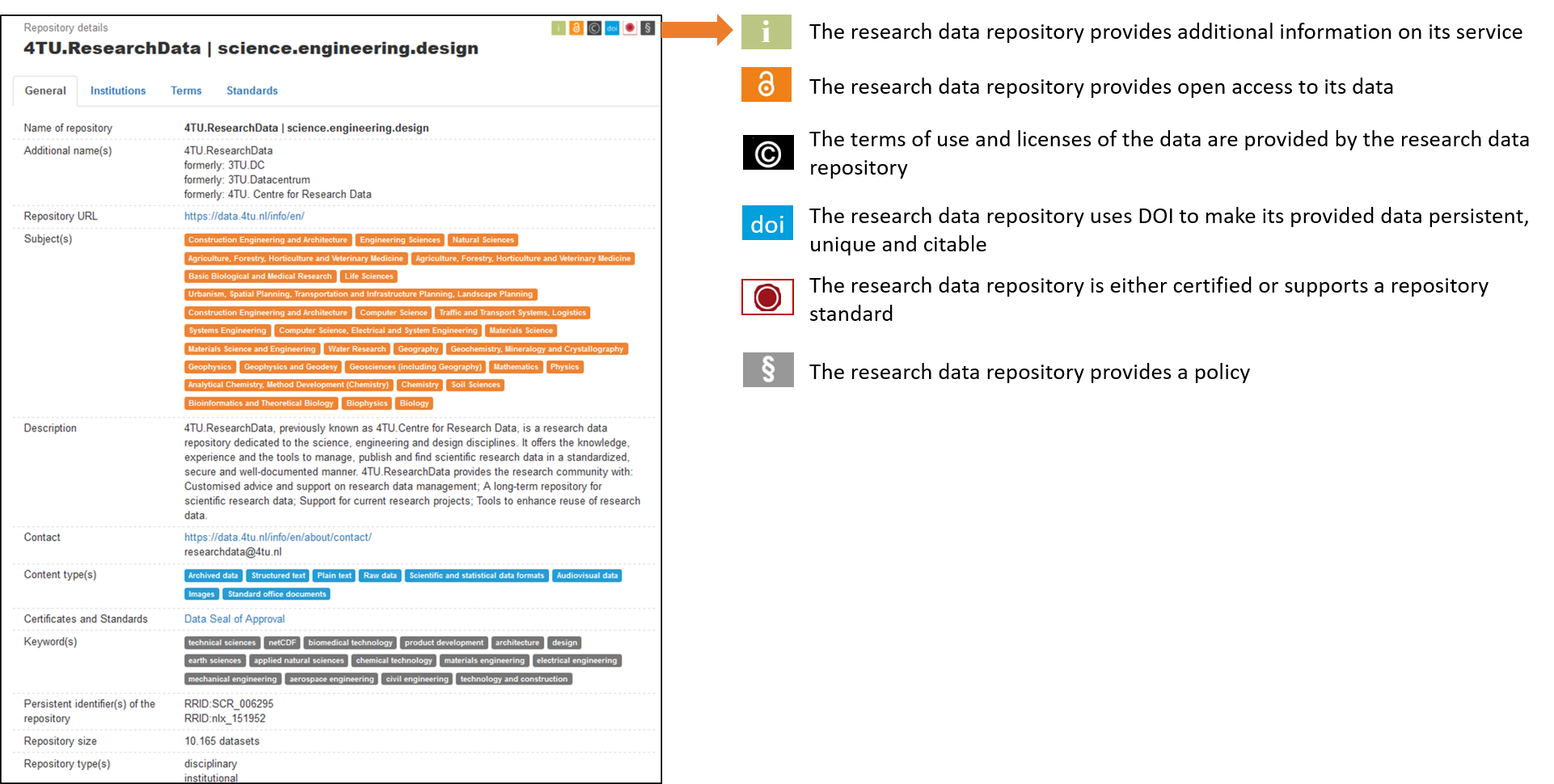

Example of a search in the re3data directory

For each repository, a short descriptive record presents:

- the subject,

- the type of content,

- the country,

- a small summary,

- icons summarizing its criteria.

Example for the 4TU repository: https://www.re3data.org/repository/r3d100010216

Tip: The search engine Google Dataset Search is also a simple tool for finding datasets, and through them the repositories that host them.


3. Long-term archiving

Long-term archiving is the final step in saving and storing research data.

Long-term archiving in the data lifecycle

Long-term archiving generally concerns only a part of the data produced by a project. For some projects, it is not necessary to archive data.

Adaptation of Research data lifecycle – UK Data Service

Definition

The question of long-term archiving only concerns data:

- with scientific value for the whole community,

- requiring preservation for at least 30 years.

It is an expensive operation that requires a dedicated budget. It is the responsibility of the laboratory, not of the individual researcher.

Concretely, long-term digital archiving consists of preserving the document and its content:

- in its physical and intellectual aspects,

- over the very long term,

- so that it always remains accessible and understandable.

Long-term archiving services in Europe

At the European level, several infrastructures offer dedicated long-term archiving services.

The European Open Science Cloud (EOSC) Portal is an integrated platform that gives easy access to many services and resources for various research domains, along with integrated data analytics tools. It includes services for long-term archiving, for example:

- EGI Archive Storage: allows you to store large amounts of data in a secure environment, freeing up your usual online storage resources. The data on Archive Storage can be replicated across several storage sites, thanks to the adoption of interoperable open standards. The service is optimised for infrequent access. Main characteristics: stores data for long-term retention; stores large amounts of data; frees up your online storage.

- B2SAFE: a robust, safe and highly available EUDAT service that allows community and departmental repositories to reliably implement data management policies on their research data across multiple administrative domains. It is designed to: provide an abstraction layer that virtualizes large-scale data resources; guard against data loss in long-term archiving and preservation; optimize access for users from different regions; bring data closer to powerful computers for compute-intensive analysis.

Selection of data to be archived

To select the data that will be archived for the long term, it is important to consider the value of the data:

- Are the data unique, or non-reproducible (or reproducible only at too high a cost)?

- Do the data have historical value, i.e., do they represent a landmark in scientific discoveries?

- Do the data reflect changes in processing methods or new standards, or do they set precedents?

- Do the data support ongoing projects or scientific trends?

- Are the data likely to meet future needs/directions of the scientific community (reuse potential)?

- Are the data likely to be cited or referenced in a publication?

- ...

- The quality and compliance of data collection must be controlled and documented. This may include processes such as calibration, repeated sampling or measurement, standardized data capture, data entry validation, peer review, etc.

- Quality and physical integrity of the data (undamaged, readable, etc.)

- What are the policies of the funder and of the institution?

- Are the data compliant with the institution's strategy?

- Is there a legal or legislative reason to preserve the data?

- Is there an obvious reason why the data might be used in litigation, public inquiries, police investigations, or any report or document that could be challenged in court?

- Are there financial or contractual obligations that require data preservation?

When considering data preservation, the cost of conservation (which covers not only storage but also management, sharing, access, backup, and long-term data maintenance) must be weighed against evidence of potential data reuse.

Consult the research archives management reference guide (Référentiel de gestion des archives de la recherche) published by the Aurore section of the Association of French Archivists.

Sources:

- NERC Data Value Checklist. https://www.ukri.org/publications/nerc-data-value-checklist/

- DoRANum: Données de la recherche : apprentissage numérique [Online]. France: DoRANum; 2017. Le Référentiel de gestion des archives de la recherche [published 13/05/2019]. Available here.

Preparation of the data to be archived

Here is a checklist to prepare your data for long-term archiving:

- Selection of datasets: The datasets (and associated metadata) selected may be different from the shared datasets.

- Volume: Evaluate the volume of data and the necessary budget.

- Data processing: Some data may need to be processed first. For example, personal data must be anonymized.

- File formats: Check the validity of data file formats according to the recommendations of the archive selected.

- Software: Document the software used to access the data and, where possible, provide it as well.

- Metadata: Complete and enrich the metadata if necessary, according to the recommendations of the archive selected.
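Two items of this checklist, evaluating the volume and keeping the files undamaged, are commonly supported by a checksum manifest stored with the archived package. A minimal Python sketch; the function names are hypothetical, and the `<checksum>  <path>` line layout mirrors the manifests of the BagIt packaging format (RFC 8493), although this is not a BagIt implementation and the selected archive may impose its own package format:

```python
import hashlib
from pathlib import Path


def sha256(path: Path) -> str:
    """Return the SHA-256 checksum of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(folder: Path) -> tuple[dict[str, str], int]:
    """Return {relative path: SHA-256} for every file, plus the total volume in bytes."""
    manifest, total = {}, 0
    for path in sorted(folder.rglob("*")):
        if path.is_file():
            manifest[path.relative_to(folder).as_posix()] = sha256(path)
            total += path.stat().st_size
    return manifest, total


def write_manifest(manifest: dict[str, str], out: Path) -> None:
    """Write one '<checksum>  <path>' line per file."""
    out.write_text("".join(f"{digest}  {name}\n" for name, digest in manifest.items()), encoding="utf-8")


def verify(folder: Path, manifest: dict[str, str]) -> list[str]:
    """Return the files that are missing or whose checksum no longer matches."""
    return [name for name, digest in manifest.items()
            if not (folder / name).is_file() or sha256(folder / name) != digest]
```

Running `verify` after each transfer or migration gives a simple, repeatable test of physical integrity. Write the manifest outside the scanned folder, or it would be included in the next scan.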



External Resources

- UK Data Service, Delivering quality social and economic data resources

- re3data, Registry of Research Data Repositories

- Google Dataset search, Dataset search engine

- EOSC Portal, European Open Science Cloud

- EGI, Archive storage

- B2SAFE by EUDAT, Long-term storage service

- Repository Finder, DataCite

- FAIRsharing, ELIXIR infrastructure

- OpenDOAR, JISC

- Open Access Directory, Simmons University

- Core Certified Repositories, CoreTrustSeal

Study materials
