Source: https://dataoneorg.github.io/Education//lessons/03_planning/L03_DataManagement_Handout.pdf

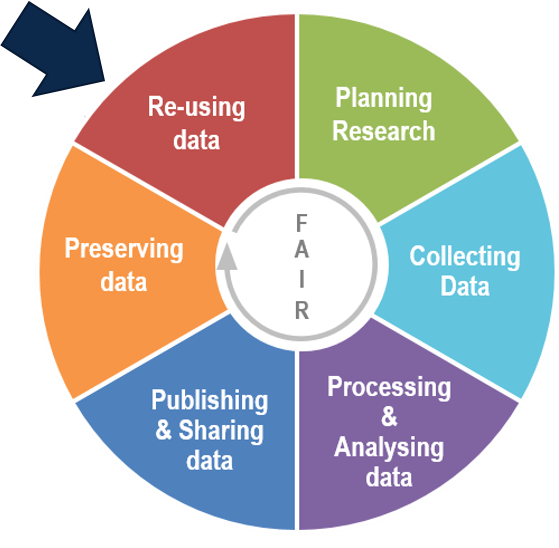

MOOC: Basics of managing and sharing research data


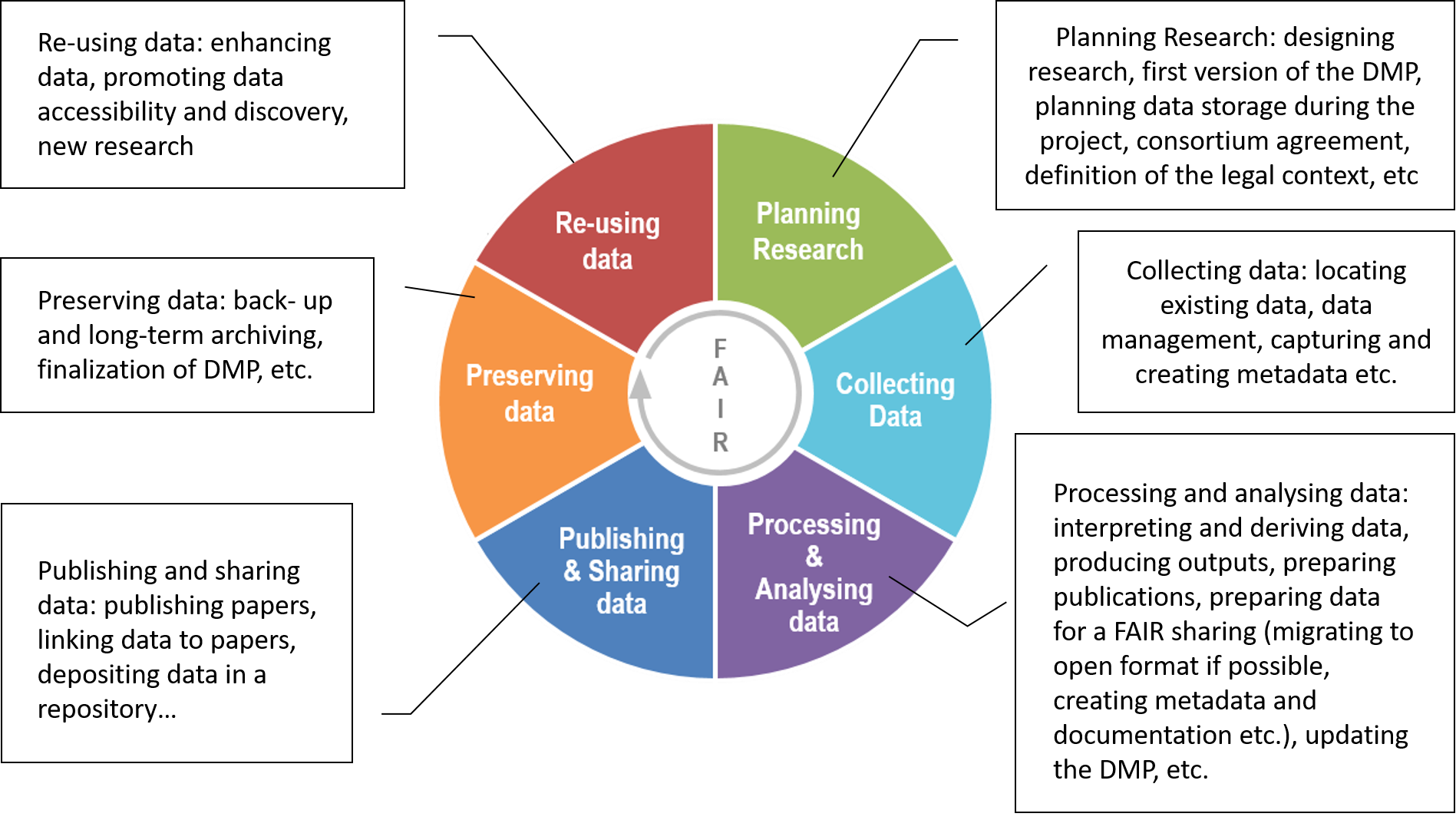

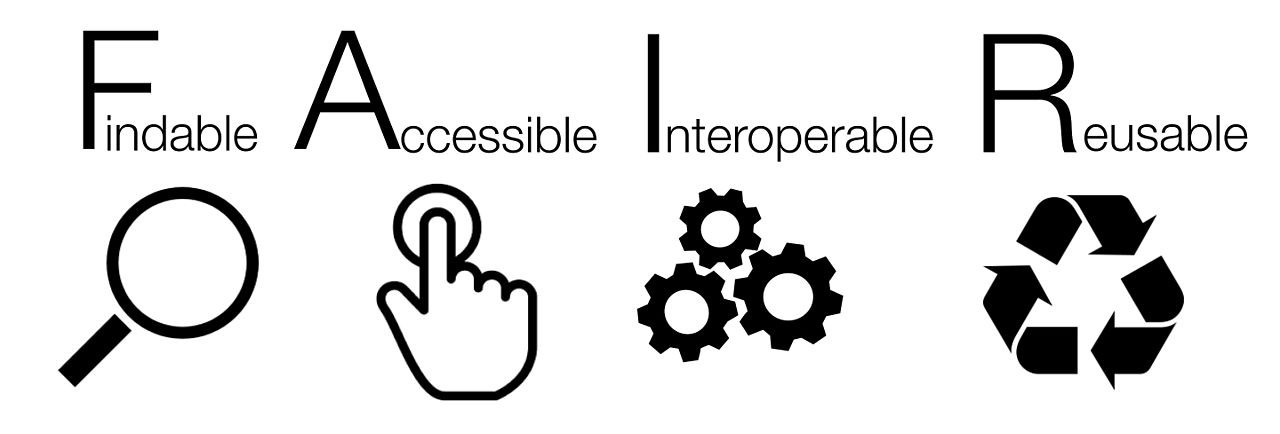

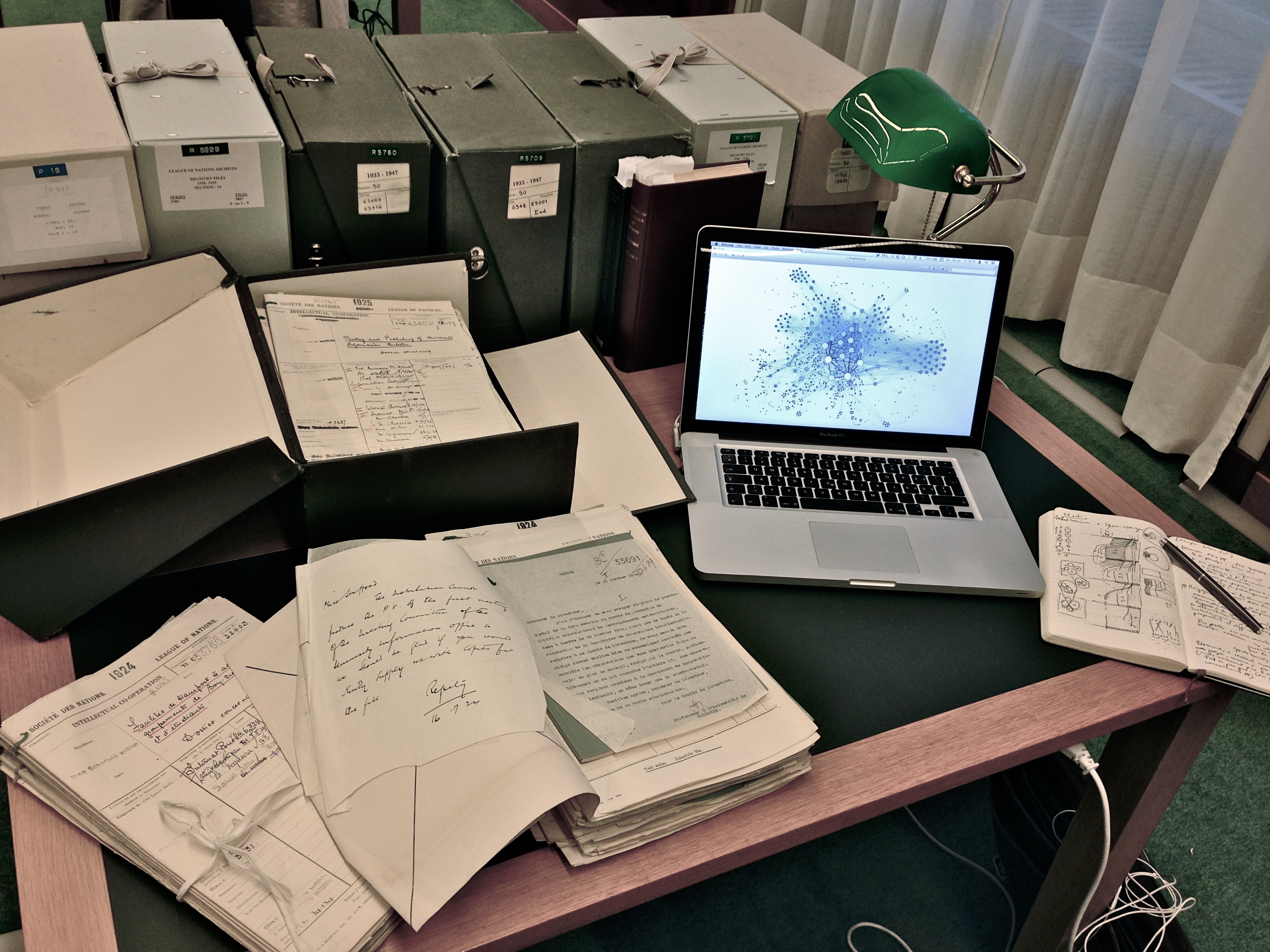

The scientific world has embraced digital technology in its research, publication and communication practices. It is now technically possible to open up science to the widest possible audience by providing open access to publications and, as far as possible, to research data.

This course introduces you to the challenges of Research Data Management (RDM) and data sharing in the context of Open Science (OS).

It was created within the framework of the Erasmus+ Oberred project in 2019. Other courses from the Oberred project are available on this platform.

OBERRED project

This course was carried out in the context of the Oberred project, co-funded by the Erasmus+ Programme of the European Union.

Oberred is an acronym for Open Badge Ecosystem for the Recognition of Skills in Research Data Management and Sharing. The aim of the Oberred project is to create a practical guide that includes the technical specifics and issues of Open Badges, roles and skills related to RDM, and principles for the application of Open Badges to RDM.

Find out more about the Oberred project here: http://oberred.eu/

This course is open access!

No account creation or registration is required; however, without registering you will only be able to browse the course in read-only mode.

To participate in the activities (exercises, forum...) and get the badge(s), you must register for the course.


For optimal use of this course, we recommend using the Google Chrome browser.

By Calvinius, personal work:

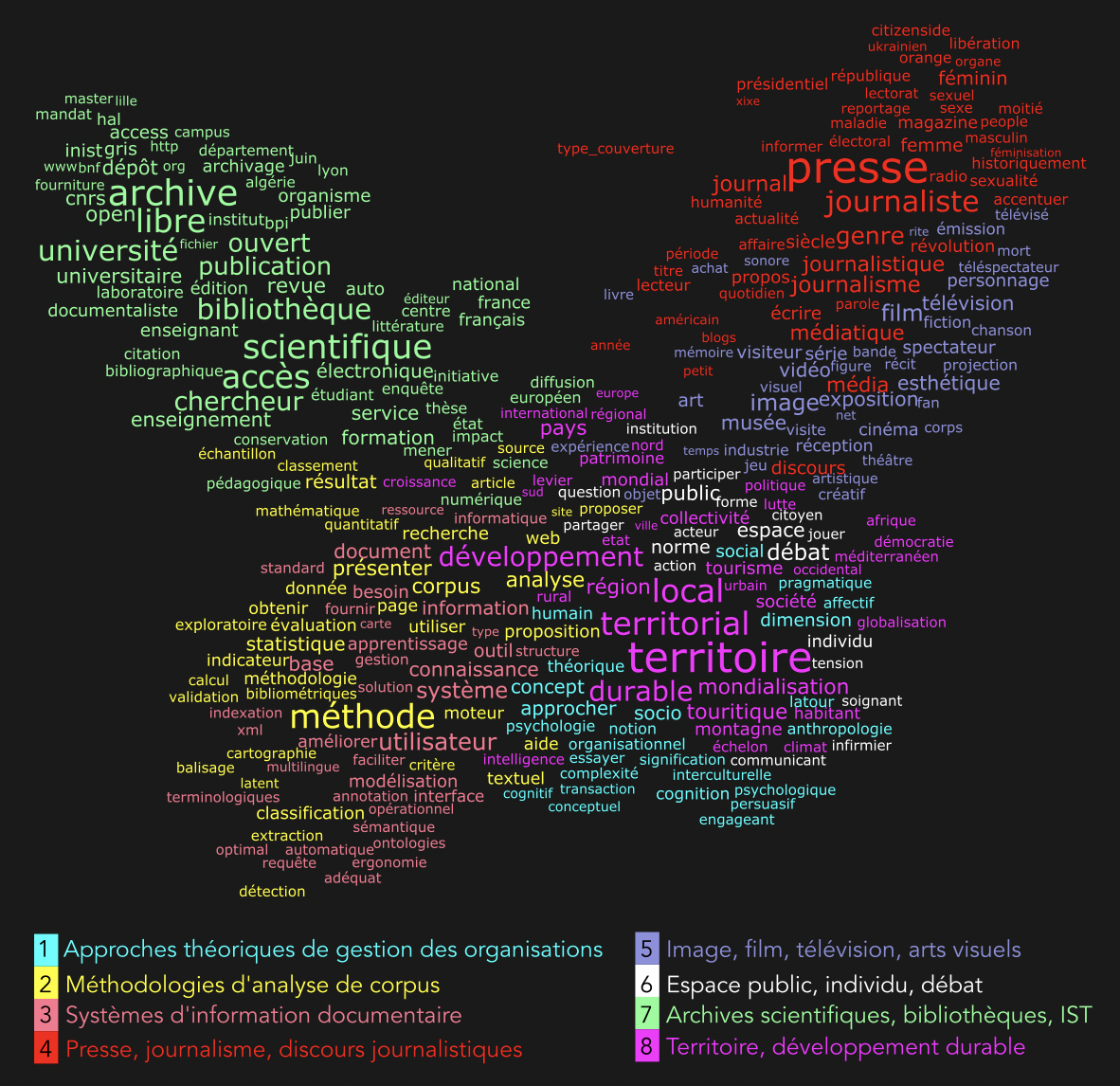

Gabriel Gallezot and Emmanuel Marty. "Le temps des SIC." In Bernard Miège, Nicolas Pelissier and Domenget (eds.), *Temps et temporalités en information-communication: Des concepts aux méthodes*, L'Harmattan, 2017, pp. 27-44.